Research overview

The CC Lab studies how ideas spread and evolve, mixing data science with theories about human behaviour, culture, and society.

In practice, we develop and apply algorithms to study the media we produce and consume, the beliefs we hold, the stories we tell, the language we use, as well as the opinions, ideas, and narratives we propagate – how they develop, and how they evolve over time.

Sometimes we also study how information is organised in nature itself, in genotypes, phenotypes, bits, bytes, and memories.

In doing so, we combine tools and approaches from computational social science, cultural analytics, data science, natural language processing, mathematical modelling, complex systems, algorithmic information theory, evolutionary biology, cultural evolution, political communication, social psychology, and cognitive psychology.

For more detail, see below. For completed research, go to the Publications tab.

How information is organised

...or how information evolves in nature.

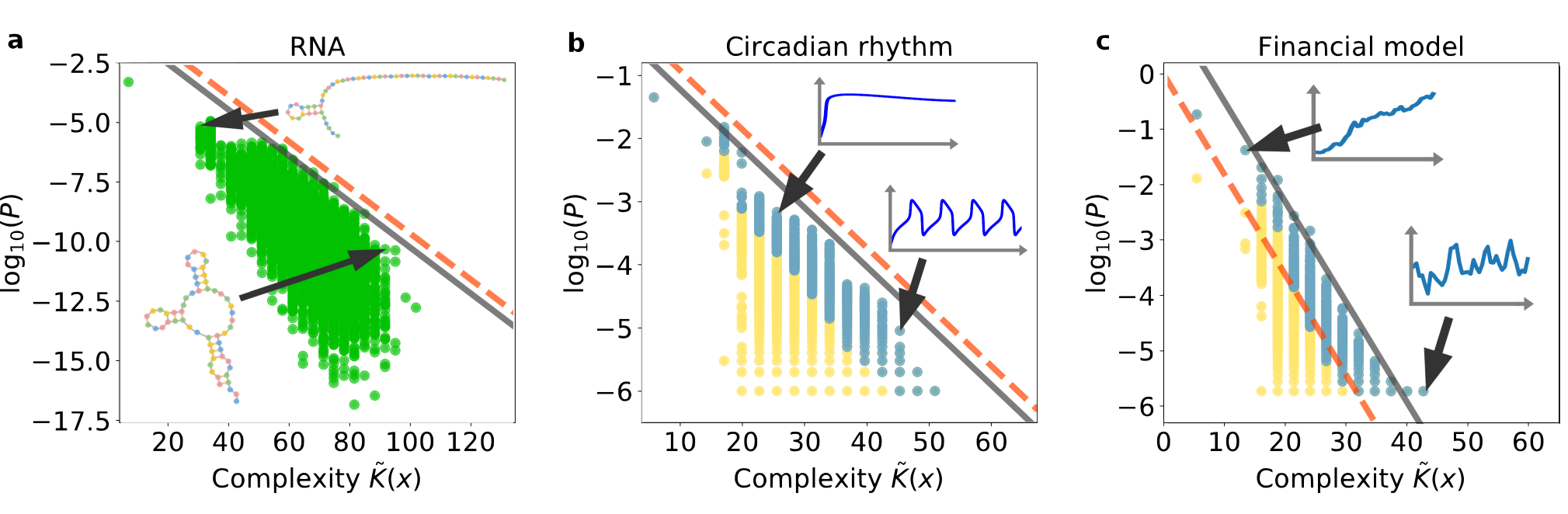

Information processing is everywhere. It is an essential component of anything that has an input and an output: our genotypes and phenotypes shaped by evolution, the computers we build and the algorithms that run on them, and the ways in which we organise and classify information. In theoretical computer science, the best description we have of computation comes from algorithmic information theory (AIT), which has been defined as "the result of putting Shannon’s information theory and Turing’s computability theory into a cocktail shaker and shaking vigorously". Although AIT is highly theoretical, we are showing that it can be applied to explain patterns of complexity in many settings: from RNA folding to gene regulatory networks to models of plant growth, and from helping to understand why neural networks generalise so well to explaining how evolutionary search is so efficient at finding solutions.

How information is used

...or how information evolves in society.

What makes people change their minds? How do we use the information around us? With the increasing influence of social media on public opinion, understanding how our beliefs change when faced with new information has become an urgent matter. Buzzwords such as "virality", "fake news", and "infodemic" might be useful when talking about these changes, but the truth is that we still don't know how it all really works. In the CC Lab, we are developing new methods to study how people change their minds, and how our ideas evolve – from the beliefs and opinions we hold, to the stories we tell, to the language we use to tell them.

How information is misused

...or how to change that!

Information can be harmful in many ways. Far from being limited to fake news, misinformation can come in the form of rumours, authentic material in the wrong context, bots and "fake crowds", and several other ways of manipulating public opinion. In the CC Lab, we are studying how information is weaponised in culture wars and propaganda, as well as how social media platforms allow that to happen, whether through biased recommendation algorithms, or by letting misinformation go unchecked. Through this research, our aim is to provide the basis for better, evidence-based policies regarding the online spread of (mis)information.